Executive summary

The pace of change within the High-Performance Computing (HPC) and AI infrastructure ecosystem has been quite dramatic in recent years. Competitive CPU offerings from multiple vendors based on different ISAs, coupled with the emergence and importance of accelerated and hybrid computing with both general-purpose and purpose-built designs, have created challenges and complexities for architects and designers of leadership-class systems.

Beyond the system design challenges, many additional elements are required for the successful deployment and operation of a leadership-class machine and data center, including:

- Requirements planning for anticipated science needs

- Site planning (e.g., real estate, floor space, power, cooling)

- Advanced manufacturing

- Installation

- System integration and acceptance testing

- Ongoing operations

- Environmental and sustainability considerations

Responding to these challenges and requirements, Eviden has drawn on its heritage in advanced energy-efficient supercomputing systems, modular data center (MDC) design, and manufacturing capabilities to deliver turnkey, scalable, high-performance solutions.

Recognizing the benefits of this integrated approach, the EuroHPC Joint Undertaking (JU) awarded Europe’s first exascale supercomputer, JUPITER (Joint Undertaking Pioneer for Innovative and Transformative Exascale Research), hosted at the Jülich Supercomputing Centre (JSC), to a ParTec-Eviden consortium. Within this EUR 500 million project, the integrated, turnkey approach was also a key factor in JSC’s choice of Eviden to adapt its MDC solutions to JSC’s expectations and JUPITER’s requirements.

Modularity, flexibility, and performance of JSC’s and FZJ’s key scientific workloads, as well as sustainability, were critical elements of JUPITER’s requirements. As part of the ParTec-Eviden consortium, Eviden delivered the BullSequana XH3000 system and designed, constructed, and delivered the MDC for JUPITER’s multiple modules.

History of Eviden and its manufacturing capabilities

Supercomputer heritage

Eviden has a history of more than 90 years of developing leadership-class HPC-AI machines. That history includes GE mainframes and Honeywell supercomputers, which were part of Bull’s lineage. Bull was acquired by Atos in 2014 and later organized under the Eviden brand as part of the Atos Group.

Eviden’s engineering and system architecture have culminated in its BullSequana family of solutions, which supports various combinations of CPU and GPU models and vendors, along with a choice of form factors and performance capabilities. The BullSequana system was developed to provide scientists, engineers, and researchers the scale of solution required for their respective HPC and AI applications and workloads, while keeping energy consumption under control.

Prior to the anticipated ranking for JUPITER in a top spot on the June 2025 TOP500 list, Eviden placed 53 systems on the November 2024 TOP500 list, including Leonardo (CINECA), then at #9 (first appearing at #4), and MareNostrum 5 (Barcelona Supercomputing Center), then at #11 (first appearing at #8).

In addition to its performance in the TOP500, BullSequana held the top 2 spots on the November 2024 Green500 list, along with the top spot in the previous June 2024 Green500.

Key to Eviden’s top-tier BullSequana rankings in the TOP500 and Green500 are its direct liquid cooling (DLC) innovations and capabilities. Now a requirement for today’s advanced HPC and AI infrastructures, liquid cooling is critical for a system to operate at its peak performance while also supporting sustainability and environmental objectives. Eviden’s implementation aims to significantly reduce a data center’s carbon footprint and energy consumption.

Manufacturing

Eviden’s manufacturing facility in Angers, France was initially established in 1962. Multiple expansions and upgrades over the years were made to support a broad client and product base with flexible and versatile capabilities required by the variety of technologies developed there, including cybersecurity, enterprise servers, HPC servers and quantum emulators. Further expansion followed in 2019 with the inauguration of the Testing Lab, continuing with the reconstruction project that began in 2021 to address challenging exascale and post-exascale requirements, improve productivity, and support anticipated market growth.

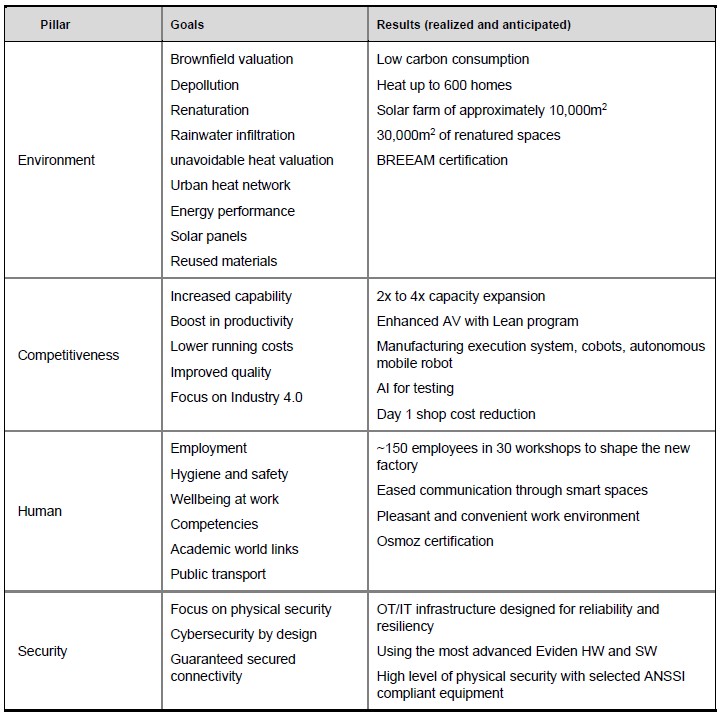

Beyond technology modernizations, goals were established for environmental sustainability, security, and optimizations for personnel safety and efficiency. Table 1 summarizes the key pillars, goals, and results (anticipated and realized to date) of the new facility’s vision.

Table 1

Angers Modern Manufacturing Facility Design Pillars

New factory AI capabilities include:

- Cobots: Designed to be worn by humans to assist in various tasks, these robotic devices are intended to help streamline and accelerate repetitive processes while still allowing individual optimizations and corrections deemed necessary by the individual wearing the device.

- AI for testing: The use of AI in testing provides a high level of quality control and repeatability, with capabilities to detect defects that are difficult for humans to identify, along with the ability to mitigate certain defects in real time.

By modernizing its manufacturing, testing, and integration capabilities, Eviden is vertically integrating its world-class engineering with state-of-the-art manufacturing operations. This vertical integration could give Eviden a future competitive advantage through added focus on, and control of, product quality and environmentally sustainable operations.

Eviden’s modular data center

Key to the success of JUPITER is Eviden’s innovative design and deployment of its Modular Data Center. Early in advanced facilities planning, JSC leadership recognized that a modular data center could provide substantial benefits relative to a traditional on-premises data center facility:

- Faster time to system power-on

  - Retrofitting an existing data center could take substantial time to complete, particularly if additional power must be brought in and/or modifications need to be made to support liquid cooling.

  - Constructing a new building would likewise take substantial time.

  - Pre-building and testing modules populated with actual servers, storage, and networking saves time, as opposed to fully assembling and integrating components on-site.

  - As single modules are incrementally assembled, brought online, and powered up on-site, lessons learned can be applied to the installation and validation of identical modules.

- Cost savings

  - CAPEX for either constructing a new data center or retrofitting an existing one was determined to far exceed the construction-related expenses of the modular data center.

  - A modular approach avoids over-provisioning floor space for unknown future requirements.

  - OPEX (e.g., power, cooling, insurance) for the modular data center was found to be lower than for a potentially underutilized new or existing traditional data center.

- Flexibility and expansion

  - Aids in expanding existing modules by replicating proven designs.

  - Accelerates the introduction of new technologies by supporting new module designs as required.

  - Supports incremental floor-space expansion as needed.

Consisting of 52 pre-built and interchangeable modules, the entire datacenter was assembled and constructed in 15 months. Assembly of the production modules began in January of 2024 at Eviden’s Angers manufacturing site in France, with the final modules delivered to JSC in March of 2025.

The turnkey data center, covering more than 2,300 m², consists of three different types of pre-built modules (1 module consists of 2 containers):

- IT modules: 7 modules (14 containers) populated to house the 125 racks that make up JUPITER and 1 empty module (2 containers).

- Power feed modules: 15 populated containers to provide the required power for JUPITER, and 6 empty containers.

- Logistics, support and datahall modules: More than 15 containers provide space for the lobby, workshop, warehouse, datahall and other functions.
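The container inventory above can be tallied with a short sketch. This is only an illustration of the arithmetic: the class and function names are hypothetical, the logistics count is treated as a lower bound because the text says "more than 15", and the racks-per-module figure is a plain average that assumes nothing about how racks are actually distributed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleType:
    """One module category from the inventory list (hypothetical helper type)."""
    name: str
    populated_containers: int
    empty_containers: int
    is_lower_bound: bool = False  # True where the text says "more than"

    @property
    def total_containers(self) -> int:
        return self.populated_containers + self.empty_containers


# The text states that 1 module consists of 2 containers.
CONTAINERS_PER_MODULE = 2

INVENTORY = [
    # 7 populated IT modules and 1 empty IT module
    ModuleType("IT", 7 * CONTAINERS_PER_MODULE, 1 * CONTAINERS_PER_MODULE),
    # Power feed figures are already given in containers
    ModuleType("Power feed", 15, 0 + 6),
    # "More than 15 containers": 15 is used as a lower bound (assumption)
    ModuleType("Logistics, support and datahall", 15, 0, is_lower_bound=True),
]

JUPITER_RACKS = 125
POPULATED_IT_MODULES = 7


def total_containers(inventory) -> int:
    """Sum populated and empty containers across all module types."""
    return sum(m.total_containers for m in inventory)


def average_racks_per_it_module() -> float:
    """Plain average; the real rack layout per module is not given in the text."""
    return JUPITER_RACKS / POPULATED_IT_MODULES


if __name__ == "__main__":
    for m in INVENTORY:
        prefix = "at least " if m.is_lower_bound else ""
        print(f"{m.name}: {prefix}{m.total_containers} containers")
    print(f"Total: at least {total_containers(INVENTORY)} containers")
    print(f"Average racks per populated IT module: {average_racks_per_it_module():.1f}")
```

With these inputs the lower bound comes to 16 + 21 + 15 = 52 containers, and the 125 racks average a little under 18 per populated IT module.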

The IT modules housing each rack of compute blades were staged and tested at the Angers facility. Once tested, the shipping container-sized modules were trucked to JSC. The compute blades from the JUPITER racks in the IT modules were removed, packaged, and shipped separately to ensure safe transport before being reinstalled on-site at JSC. The blades were fully tracked and monitored (e.g., temperature, vibrations) during shipment to further ensure their quality and reliability.

Figures 1, 2, and 3 illustrate the modules at various stages of the module integration and installation process at JSC.

FIGURE 1

Integration of JUPITER IT module in the modular data center at Eviden’s Angers site before shipment to JSC

FIGURE 2

Installation of Logistics Module at JSC

FIGURE 3

Installation of Power Feed Module at JSC

The JUPITER supercomputer

JUPITER is the JU’s current flagship supercomputer and is expected to be the EU’s first exascale computer. Envisioned with the future in mind, JUPITER’s implementation pioneered several advanced modularity concepts, including:

- Infrastructure partitions to support different existing computing capabilities while anticipating future new and novel computing approaches.

- Data center modules to minimize construction costs and operational expenses, and to achieve faster time to power-on relative to traditional data centers.

Key Eviden phases of the JUPITER development included:

- Procurement: Securing and executing the contract with the JU.

- Design: Combining Eviden’s hardware background with its innovative MDC design expertise, backed by design support and on-site integration capabilities.

- Manufacturing: Building the system from the ground up and integrating all components in the racks at Eviden’s factory.

- Deployment: Pre-integration in the MDC at Eviden’s Angers factory before final installation at JSC and on-site setup.

Elements of JUPITER’s architecture evolved from a set of EuroHPC-funded initiatives with strong contributions from Eviden and JSC. Specifically, the Software for Exascale Architecture (SEA) sub-projects (DEEP-SEA, IO-SEA, and RED-SEA) served as a blueprint architecture for highly efficient and scalable heterogeneous exascale HPC systems. The three projects collaborated on system software and programming environments, data management and storage, and interconnects.

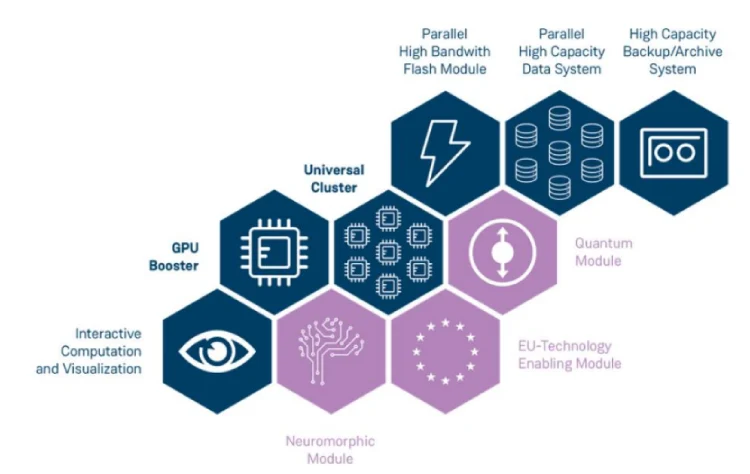

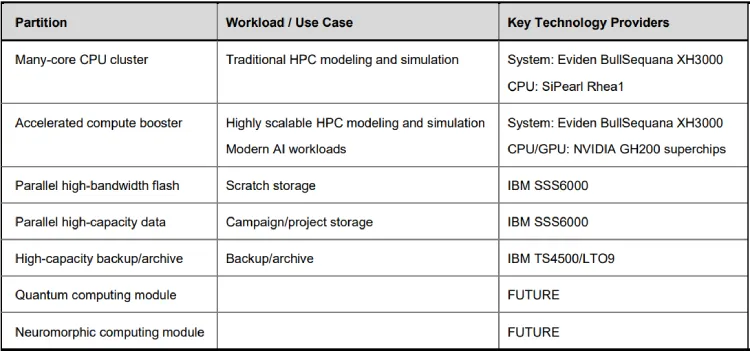

JUPITER’s modular design, with cluster and booster partitions realized by Eviden’s BullSequana XH3000 product family, is architected to support diverse scientific workloads. These range from traditional HPC modeling and simulation workloads needing large-scale, many-core CPU nodes to modern AI workloads needing a large-scale configuration of GPU-accelerated nodes with high-bandwidth, low-latency HBM. Other modules planned in the phased roll-out of JUPITER include partitions for storage, quantum computing, and the initial installations of the SiPearl Rhea CPU, developed as part of the European Processor Initiative (EPI).

Connecting the modules, including the Booster module, is a 400 Gb/s NVIDIA Quantum-2 InfiniBand NDR network implementing a DragonFly+ topology. This high-performance network connects 25 DragonFly+ groups within the Booster module, as well as two additional groups for the Cluster module, storage, and administrative infrastructure.
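To give a feel for the scale of the group counts mentioned above, the sketch below enumerates the groups and counts the group-to-group pairs. It assumes that every pair of groups is directly linked, which is the textbook global layer of Dragonfly-style topologies; the source does not describe JUPITER's actual wiring, and the group names and function names are hypothetical.

```python
from itertools import combinations

# Group counts taken from the text
BOOSTER_GROUPS = 25
ADDITIONAL_GROUPS = 2  # Cluster module, storage, and administrative infrastructure


def group_names() -> list[str]:
    """Hypothetical labels for the DragonFly+ groups."""
    booster = [f"booster-{i}" for i in range(BOOSTER_GROUPS)]
    other = [f"other-{i}" for i in range(ADDITIONAL_GROUPS)]
    return booster + other


def all_to_all_group_pairs(groups: list[str]) -> list[tuple[str, str]]:
    """Every unordered pair of groups, ASSUMING a fully connected global layer."""
    return list(combinations(groups, 2))


if __name__ == "__main__":
    groups = group_names()
    pairs = all_to_all_group_pairs(groups)
    print(f"Groups: {len(groups)}")
    print(f"Group pairs under the all-to-all assumption: {len(pairs)}")
```

Under that assumption, 27 groups give 27 × 26 / 2 = 351 group pairs. The figure is a count of pairs only and says nothing about how many physical links serve each pair.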

Figure 4 depicts the JUPITER architecture and Table 2 summarizes each initial partition, use case, and key respective technology provider.

FIGURE 4

JUPITER Modular Architecture

Table 2

JUPITER Modular Partitions

Future outlook

Change across all dimensions of the advanced computing ecosystem (e.g., performance, incorporating AI, CPU types, GPU types, storage technologies, power generation and consumption, cooling technologies, environmental sustainability) is broad and dynamic, which makes planning for researcher needs and anticipating future technologies a daunting task. The status quo of planning for incremental evolution from generation to generation within existing power, cooling, and floor space infrastructure will no longer hold.

Future technology requirements need to anticipate both incremental evolution of existing technologies and novel revolutionary innovation with the flexibility to adapt and not be constrained by past, outdated approaches. Research sites and vendors that do not incorporate modularity in both system architecture and facilities planning may find it difficult to maintain research excellence and leadership in their HPC-AI solutions. This is highlighted by the rapid rate of change in new GPUs, with new generations expected to appear every 12 months.

Planning for a flexible, modular future cannot be accomplished by a single entity or in a vacuum. Intentional partnerships and collaborations between like-minded progressive institutions are required to fully realize substantial benefits. Should JUPITER satisfy the goals set forth by JSC and the JU, organizations and institutions with similar aspirations should be encouraged to emulate the model established by the cooperation between JSC and the ParTec-Eviden technology consortium.

Copyright 2025 Hyperion Research LLC and Eviden