What we offer

We empower organizations to accelerate their AI applications through flexible, scalable architectures that deliver exceptional performance and extensibility. Eviden’s AI solutions combine advanced computing with energy-efficient systems, helping enterprises enhance their AI initiatives while reducing carbon emissions. Our offerings are tailored for AI applications, especially large language models (LLMs) and numerical simulations, and draw on our extensive consulting experience and infrastructure to optimize and accelerate model performance.

Our products

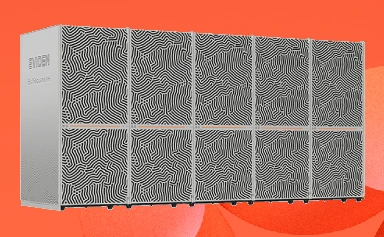

BullSequana XH3500

BullSequana XH3500 is the next-generation supercomputer for AI factories and advanced simulations.

Mid-range servers for High Performance AI

Leverage exceptional computing capabilities and strike a balance between cost and performance with the BullSequana AI mid-range server family.

AI SuperCluster

An AI offering based on the NVIDIA GB200 NVL72, designed to enhance the training and inference of next-generation large language models (LLMs).

Our services

Consulting Services

Eviden empowers businesses to streamline their entire AI workflow. From initial data exploration and interpretation of model outputs to rapid deployment and performance evaluation, Eviden’s high-performance computing accelerates every stage. The result is faster time-to-insight and a significant boost in overall AI development efficiency.

Seamless Experience

Eviden is committed to delivering a seamless experience with our high-end supercomputers. We handle the delivery, installation, and configuration of your supercomputer, ensuring it is ready to meet your most demanding computational needs. We also provide ongoing maintenance and technical support throughout the entire lifetime of the system, guaranteeing optimal performance and reliability.

Who uses our products and services

Use cases

Adapt and customize models according to specific business use cases.

Support large language models (LLMs), ensuring optimal performance and scalability.

Identify the exact value that AI can bring to existing simulations and leverage the optimal infrastructure.

Get in touch!

Connect with us to unlock the potential of High Performance AI.