What is artificial intelligence?

Artificial intelligence (AI) is a category of technologies by which computers and machines simulate human intelligence and behaviors. It solves complex problems and executes tasks with remarkable speed and accuracy. In limited areas, it significantly outperforms human capabilities.

AI is developing rapidly, thereby transforming industries by enhancing efficiency, productivity, and performance. But it’s a long way from being able to think and act like humans do.

How artificial intelligence works

At the heart of AI are decision-making and learning algorithms plus a lot of data. It’s like teaching a computer to think and learn, so it gets better and better at doing its job. This synergy allows AI systems to replicate human cognitive functions, including learning, reasoning and decision-making. Understanding how artificial intelligence works involves several key concepts:

Data hoarding: AI is a bit of a data hog. It gobbles up info from everywhere – words, pictures, videos — you name it.

Pattern spotting: It’s got an eye for details, finding patterns in the data that humans might miss.

Learning the ropes: Just like us, AI needs practice. It learns from data to help it make smart guesses and decisions.

Making the call: Once it’s up to speed, AI can make predictions or choices based on new input it hasn’t seen before.

Never stops learning: The cool part? Continuous learning is at the core of any sustainable AI system. AI keeps learning, getting sharper and more on point over time.

Types of artificial intelligence

AI can be categorized into different types based on their capabilities and complexities: narrow AI, strong AI and generative AI (GenAI).

Narrow AI: This type is a one-trick pony; really good at one specific thing, like being your personal DJ or keeping spam out of your inbox. Examples include:

- Voice assistants such as Siri or Alexa, which understand and respond to user commands.

- Recommendation systems used by platforms like Netflix and Amazon to suggest movies or products.

- Spam filters to identify and block unwanted emails.

- Autonomous vehicles like self-driving cars that process data from multiple inputs to make decisions and execute tasks on the road.Narrow AI is prevalent in today’s technology landscape because it provides targeted solutions effectively and efficiently.

Narrow AI recommends movies based on what we watch and like

Strong AI: Now, this is the big dream – an artificial intelligence that’s as smart as a human and able to learn and adapt to anything you throw at it, hopefully without being scary and taking over the world. Examples of it today are mostly in the laboratory, but one day we’ll hear about it in the real world.

Generative AI: This creative genius can generate new stuff, like writing a poem, designing a dress or planning an effective marketing strategy.

The introduction of transformer technology, a deep learning architecture, has significantly enhanced GenAI’s capabilities, enabling tools like:

- ChatGPT for generating human-like text and conversations.

- Google Gemini and Amazon Bedrock for creating diverse types of content.

- Design tools, like those offered by Meta Llama and startups like Hugging Face.

GenAI is not just limited to content generation. It also reviews and refines its outputs. Key applications of this include:

- Conversational user assistance to provide context-aware responses for customer service or virtual assistants.

- Content creation and curation, to generate and refine content like articles, images and videos.

- Software development, assisting in code generation, testing and automation.

- Strategic ideation, designing engineering components, new drugs or architecture plans.

Why did the narrow AI go to school?

To learn how to become a strong AI and finally understand the intricacies of artificial intelligence technology!

The evolution and history of artificial intelligence

The journey of AI technology is marked by significant milestones that have shaped today’s innovations. Let’s take a look.

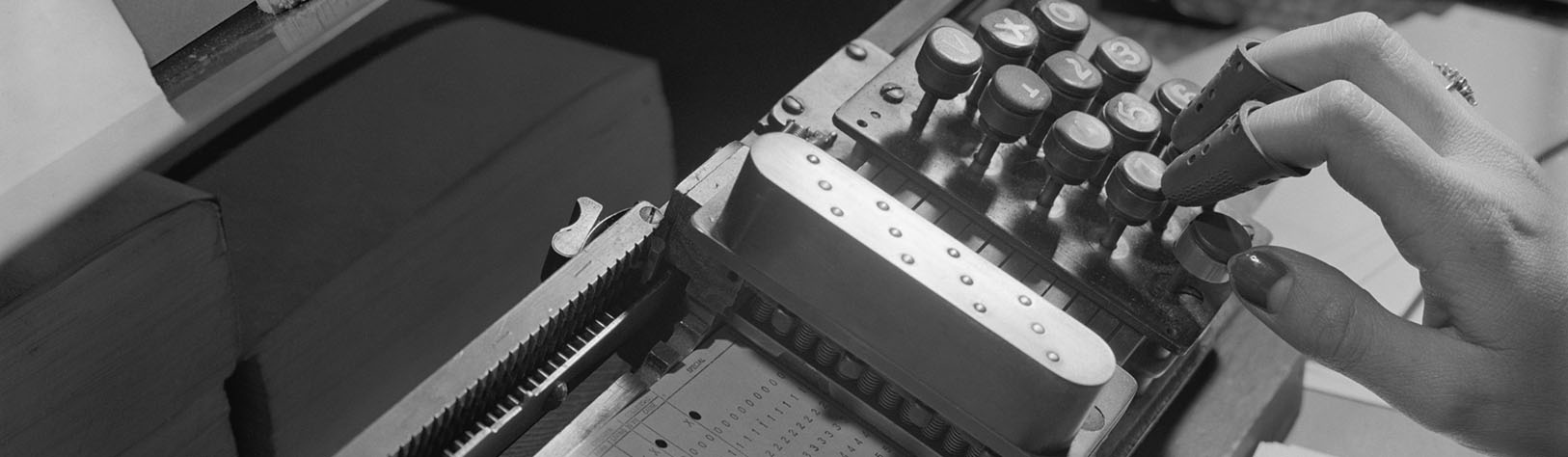

Processors used to be people

Early beginnings

1950s

The concept of artificial intelligence was born, with pioneers like Alan Turing and John McCarthy laying the groundwork. According to the famous Turing test, “A computer would deserve to be called intelligent if it could deceive a human into believing that it was human.”

1960s-70s

Early AI research focused on symbolic AI and problem-solving, leading to developments in basic natural language processing and simple game-playing programs. This is when the concept of knowledge graphs was born.

1970s-80s

Overpromising by early AI researchers led to reduced funding and interest, a period known as the AI winter. Despite setbacks, foundational work in machine learning and neural networks continued, setting the stage for future breakthroughs.

It’s important to note that the computers of this age simply didn’t have the computational processing power to handle the complex algorithms required for advanced AI. Today’s AI benefits greatly from modern CPUs and GPUs, which were unimaginable back then.

Machine learning and data explosion

1990s-2000s

Advancements in computational power and the rise of the internet provided unprecedented amounts of data. This enabled significant breakthroughs in machine learning, such as IBM’s Deep Blue defeating chess champion Garry Kasparov in 1997.

Modern AI revolution

2010s-today

The field of artificial intelligence has seen rapid acceleration. Key technologies like deep learning and neural networks have matured, supported by advancements in hardware (GPUs) and the availability of big data.

Deep learning: Also known as neural networks, deep learning involves machine learning models with multiple layers that automatically learn from raw data. This has significantly improved tasks like image and speech recognition.

Natural language processing (NLP): Technologies like GPT and BERT have revolutionized how machines understand and generate human language.

Why did the NLP model break up with the deep learning model?

Because it couldn’t find the right words, and deep learning was always too deep in thought!

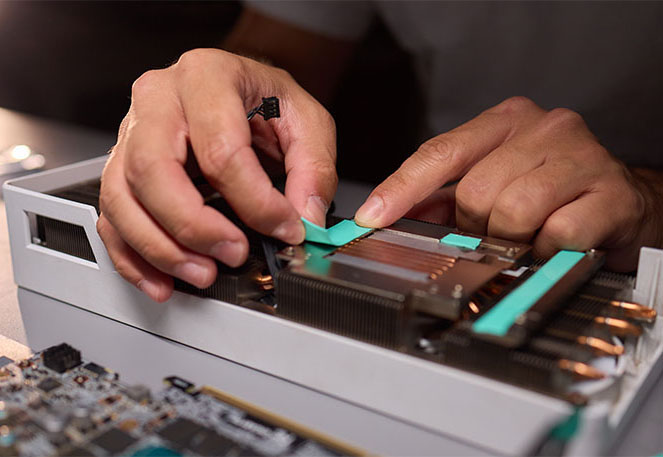

The modern tech powering AI

Imagine you’re trying to choose between two types of turbo-charged engines for your tech project, each designed to handle big jobs but in different ways. These engines are called GPUs and NPUs, and they’re super important to the modern AI revolution.

What’s a GPU?

Graphics processing units (GPUs) are specialized processors that were originally made to handle graphics like the ones in video games. GPUs can do many things at once, making them great for tasks that involve a lot of data. Here are some examples:

Parallel processing

Think of GPUs as the ultimate multitaskers. They handle lots of tasks simultaneously.

Versatility

You can use GPUs for many things, not just AI, like graphics rendering and scientific calculations.

Widely used

GPUs have been around for a while and are popular in AI tasks, thanks to big players like NVIDIA.

GPUs are specialized processors originally made to handle graphics

NPUs integrate nicely into mobile devices

What’s an NPU?

A neural processing unit (NPU) is like having a specialist in the room. It’s a processor designed specifically for artificial intelligence tasks, making it extra efficient at things like recognizing images and processing language.

Task-specific

NPUs are fine-tuned for neural network tasks, which are the backbone of many AI applications.

Energy efficient

They use less power than GPUs, making them perfect for mobile devices and other gadgets.

Integrated

You’ll often find NPUs built into modern devices like smartphones and smart home gadgets because they’re great at what they do without draining the battery.

What’s a data lakehouse, and why should you care?

Imagine having a magical storage space where you can keep all your stuff — whether it’s books, clothes, gadgets or random knickknacks — organized and easy to access. That’s kind of what a data lakehouse is, but for data!

Challenges of using artificial intelligence in modern enterprises

Implementing AI in modern enterprises comes with a set of big challenges. All of which need to be bypassed to fully realize the potential of AI technology.

Integrating GenAI into legacy systems is a daunting task, especially if you have been sitting on your tech debt. Just think of all those banks still running mainframes! Many enterprises experience more complexity in their GenAI projects than they initially expect. Issues like data silos and inconsistencies can directly impact AI’s performance and the accuracy of its results. What’s more, some GenAI vendors require data to be converted into specific formats or necessitate dedicated infrastructures, adding another layer of complexity.

Building trust and ensuring transparency are paramount when adopting AI technologies. Here are some reasons why.

Closed-source models: Enterprises should be cautious about closed-source models, where internal workings remain opaque, leading to unpredictable behaviors and inappropriate results, such as hallucinations.

In February 2024, a major airline was sued for the incorrect content its chatbot shared with a passenger.

Guardrails and filters: While vendors provide guardrails or filters, these are only partially effective. Users usually find ways to circumvent these protections, posing a risk for enterprises. Nobody wants to be in charge of a rogue artificial intelligence.

Demand for open models: Open or open-source models, for which demand is growing, allow greater control and fine-tuning according to specific needs, enhancing trust and transparency.

Cybersecurity: AI can inadvertently amplify security risks. For example, GenAI can facilitate large-scale criminal operations and create more convincing deceptions; notably deepfake voice or video attacks, which are particularly concerning for sectors like banking.

Regulatory challenges complicate AI implementation. Here’s why:

Regulatory initiatives: New laws and policies such as the UN AI Advisory Body, the AI Executive Order in the US and the EU AI Act are still under development, potentially impacting AI implementation. Today most legislation is focused on regulating the safety, transparency and traceability of AI development.

Sovereignty concerns: Geopolitical factors, like the US and China’s increasing embargoes on each other’s technology, complicate international business operations. Enterprises must navigate these complexities to determine the viability of AI technologies across different regions.

Six priorities to explore in AI implementation

To navigate these challenges and harness AI’s full potential, enterprises should focus on the following priorities:

Be bold

Have ambitious goals and identify what you aim to achieve. The speed of change is accelerating, so having a clear and specific objective can help you influence stakeholders and achieve breakthroughs faster.

Data, data, data

Reassess and optimize your data strategies. Artificial intelligence is only as effective as the data it is trained on. If all your data is in Excel, that’s not going to work. Modernize data architectures with strategies like data fabric and data mesh, and consider technologies like data lakehouses for unified access and governance.

Stay agile and open

Engage with open-source communities that compete and collaborate with traditional independent software vendors in AI and GenAI. Open-source models offer customization and accelerate technological progress, emphasizing the power of open innovation.

Accelerate value-driven experimentation

Focus on business outcomes rather than just technology. Experiment with AI applications to optimize the outcome/cost/performance ratio. Balance the need for powerful models with practical business results.

Integrate AI into your processes and IP

Leverage AI as an integral part of your data, workflows and solutions. Collaborate with AI integrators to be ready for opportunities across the information systems stack and along the HPC-to-cloud-to-edge continuum. (The term “Cloud-to-Edge Continuum” refers to the seamless integration and interaction between cloud computing resources and edge computing infrastructure.)

Responsible AI strategy

Create a culture of responsibility by engaging diverse stakeholders and legal counsel in developing a responsible artificial intelligence strategy spanning business, technology and legal domains. Four must-have topics to address are as follows:

- Understanding and mitigating risks

- Ensuring transparency and explainability

- Addressing fairness and bias

- Maintaining accountability and privacy

AI has arrived

AI is here, and it’s as transformative as the internet was. Nobody wants to be left behind.

The evolution of artificial intelligence from its early conceptions in the 1950s to modern-day innovations highlights AI’s transformative potential. However, the implementation of AI in enterprises comes with significant challenges, including integration difficulties, trust and transparency issues, cybersecurity risks, and complex regulatory landscapes. By adopting a strategic approach and focusing on bold, data-driven and responsible AI practices, enterprises can overcome these challenges and unlock the immense potential of AI technology. As artificial intelligence continues to evolve, understanding its capabilities and navigating its complexities will be crucial for staying ahead in the competitive landscape.

Learn more about Eviden’s Artificial Intelligence, Machine Learning, and Cognitive Services, as well as our BullSequana AI infrastructure solutions

Discover how Eviden’s GenAI Acceleration program can help you harness the full power of Generative AI