In 2023, the spotlight was on Artificial Intelligence (AI), as OpenAI’s ChatGPT captured the imagination of the mainstream. Suddenly, AI emerged as the new keyword king, surpassing other tech buzzwords in Google searches. But let’s be clear, the fascinating world of Large Language Models (LLMs), such as ChatGPT, is just the tip of the AI iceberg, and the journey didn’t just kickstart in 2023. It traces back to 1950 when Alan Turing introduced the world to the concept of machine thinking with his groundbreaking paper, “Computing Machinery and Intelligence.”[1] Today, our journey veers away from AI’s historical paths to explore its innovative applications in the realm of cybersecurity.

This article aims to unravel the concept of System 2 thinking, dive into its application through multi-agent systems, and explore their potential roles in strengthening offensive and defensive cybersecurity strategies.

Delving into System 2 Thinking

The term System 2 thinking was popularized by psychologist and Nobel laureate Daniel Kahneman. In his book Thinking, Fast and Slow,[2] Kahneman delves into the dynamics of our cognitive processes, classifying them under two distinct systems:

- System 1 operates automatically and quickly, with little or no effort and no sense of voluntary control.

- System 2 allocates attention to the effortful mental activities that demand it, including complex computations. The operations of System 2 are often associated with the subjective experience of agency, choice, and concentration.

This understanding has found its application in the world of AI, particularly within LLMs like ChatGPT and Gemini. LLMs demonstrate an incredible prowess in mimicking System 1’s quick and intuitive thinking, but they are still on the path to mastering the intricate and reflective thinking of System 2. Recently, however, a few interesting ideas for applying System 2 thinking have emerged.

The following ideas address System 2 thinking in various ways.

Meta’s System 2 Attention[3] aims to regenerate user prompts by focusing on relevant points. The Chain-of-Thought[4] approach prompts the model to write out intermediate reasoning steps before it gives a final answer. In this article, we will focus on another method that tackles System 2 thinking: multi-agent systems[5][6].

The rise of multi-agent systems

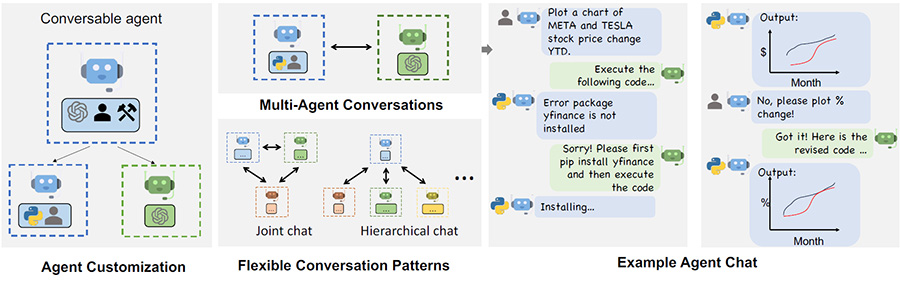

These innovative systems are composed of several autonomous entities, known as agents, capable of interacting and collaborating in a myriad of ways. From simple joint efforts and team collaborations to intricate hierarchical structures or even dynamic interactions reminiscent of a student challenging a teacher, the versatility of these agents is profound.

Although each framework builds on the same foundational concept, they differ significantly in their capabilities and complexity. For example, CrewAI excels in managing straightforward workflows, while AutoGen[7] supports more sophisticated ways of organizing agents. The capabilities of the individual agents are bound only by our creativity and technical prowess, enabling them to perform a vast array of tasks, from leveraging APIs and executing code to accessing terminals and summarizing texts.

With this foundational understanding, let’s delve into how these remarkable systems can impact cybersecurity.

Disclaimer: The concepts mentioned below are extremely simplified and do not take aspects like legal issues or misuse of responsibilities into consideration.

Setting up a secure defense system

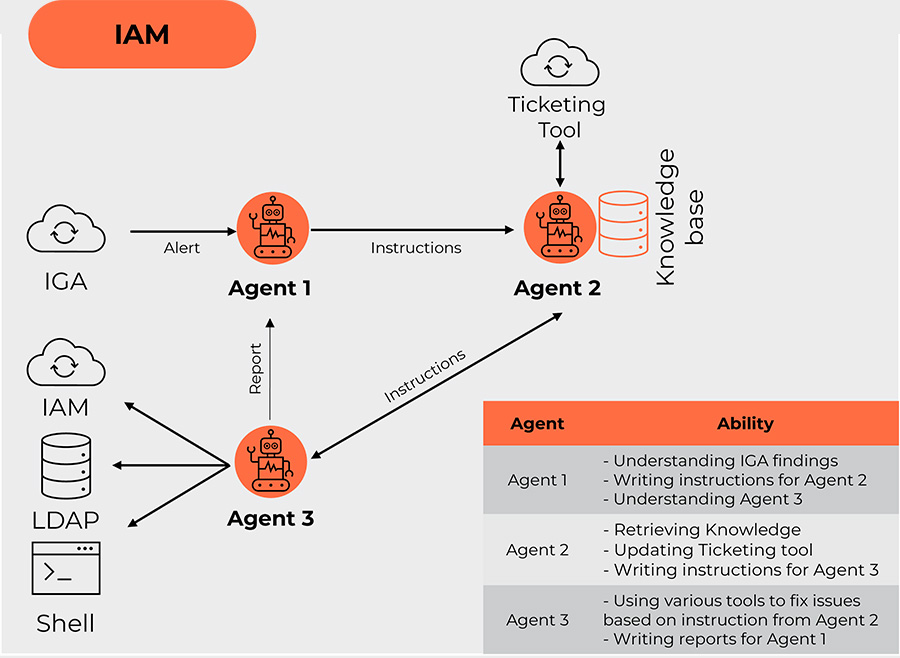

Scenario 1: Coupling IAM with IGA

In our first scenario, we explore the effective collaboration between Identity and Access Management (IAM) and Identity Governance and Administration (IGA) systems, facilitated by three key agents.

Here’s the breakdown of the activities in Figure 2:

- The IGA system generates an alert (e.g. due to a change in organizational structure, some identities have unnecessary roles).

- Agent 1 picks up and “understands” the alert, and based on the provided data, formulates instructions for Agent 2.

- Agent 2 uses its knowledge base of use cases to create an incident in the ticketing tool and formulates work instructions and a ticket number for Agent 3.

- Agent 3 executes instructions provided by Agent 2 and sends a report to Agent 1.

- If the operation is successful, Agent 1 instructs Agent 2 to update and close the ticket.

- If more action is needed, Agent 1 instructs Agent 2 to update the ticket and, based on the knowledge base, formulates a new instruction for Agent 3.

This simple workflow can, in theory, automate most IAM data quality and compliance tasks.
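The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the LLM reasoning is replaced by plain functions, the ticketing tool and the IAM store are in-memory stand-ins, and all names (`TicketingAgent`, `ExecutorAgent`, `coordinator`, the alert fields) are hypothetical. For brevity, the coordinator writes the work instruction itself rather than delegating that step to Agent 2.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Ticket:
    number: int
    status: str = "open"
    notes: list = field(default_factory=list)


class TicketingAgent:
    """Agent 2: opens and maintains tickets (in-memory stand-in for a ticketing tool)."""

    def __init__(self):
        self._ids = count(1)

    def open_ticket(self, instruction):
        ticket = Ticket(number=next(self._ids))
        ticket.notes.append(instruction)
        return ticket

    def update(self, ticket, note, close=False):
        ticket.notes.append(note)
        if close:
            ticket.status = "closed"


class ExecutorAgent:
    """Agent 3: removes roles in an in-memory IAM store (assumed, not a real IAM API)."""

    def __init__(self, iam_roles):
        self.iam_roles = iam_roles

    def execute(self, identity, role):
        roles = self.iam_roles.get(identity, set())
        if role not in roles:
            return {"ok": False, "detail": f"{role} not assigned to {identity}"}
        roles.remove(role)
        return {"ok": True, "detail": f"removed {role} from {identity}"}


def coordinator(alert, ticketing, executor, max_attempts=2):
    """Agent 1: turns an IGA alert into work and closes the ticket on success."""
    instruction = f"Remove role {alert['role']} from {alert['identity']}"
    ticket = ticketing.open_ticket(instruction)
    for _ in range(max_attempts):
        report = executor.execute(alert["identity"], alert["role"])
        if report["ok"]:
            ticketing.update(ticket, report["detail"], close=True)
            return ticket
        # More action needed: record it and try again, up to max_attempts.
        ticketing.update(ticket, f"retry needed: {report['detail']}")
    return ticket  # still open, left for a human to review


iam = {"alice": {"finance-approver", "employee"}}
alert = {"identity": "alice", "role": "finance-approver"}
ticket = coordinator(alert, TicketingAgent(), ExecutorAgent(iam))
print(ticket.status, iam["alice"])
```

The `max_attempts` cap is a design choice of this sketch: it keeps a failing instruction from being retried indefinitely and leaves the ticket open for a human instead.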

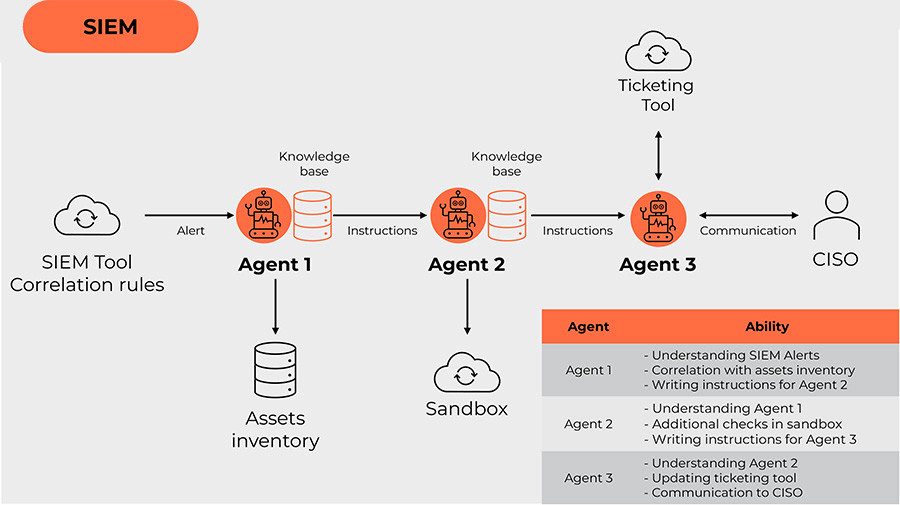

Scenario 2: Leveraging SIEM tools

This scenario is dedicated to the security information and event management (SIEM) use case. Agents collaborate with the SIEM tool, the ticketing tool and the CISO to manage alerts raised by correlation rules.

Here’s a breakdown of the activities in Figure 3:

- The SIEM tool generates an alert for suspicious action found in the log files.

- Agent 1 picks up and “understands” the alert based on the provided data and, using the knowledge base, checks whether the scenario matches the asset inventory. If yes, Agent 1 writes instructions for Agent 2.

- Agent 2 follows the instructions provided by Agent 1 and tries to recreate the action (e.g. checks a suspicious link in an isolated sandbox environment). If this confirms the risk, Agent 2 writes instructions for Agent 3.

- Agent 3 opens and maintains a ticket in the ticketing tool and has a natural language conversation with the CISO via agreed communication channels.

This workflow can automate some SIEM use cases and ensure immediate reaction to risk.
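A rough sketch of this pipeline is shown below. It assumes that each agent can be reduced to a single function, that the asset inventory and the sandbox verdict are simple lookups, and that the alert carries `host` and `url` fields. All of these names and values are hypothetical placeholders rather than part of any real SIEM or sandbox API.

```python
from urllib.parse import urlparse

ASSET_INVENTORY = {"web-01", "db-02"}      # hypothetical in-scope hosts
SANDBOX_BLOCKLIST = {"malicious.example"}  # stand-in for a real sandbox verdict


def triage(alert):
    """Agent 1: pass on only alerts that concern an asset in the inventory."""
    if alert["host"] not in ASSET_INVENTORY:
        return None
    return {"host": alert["host"], "url": alert["url"]}


def sandbox_check(task):
    """Agent 2: stand-in for opening the link in an isolated sandbox."""
    domain = urlparse(task["url"]).hostname
    return {**task, "confirmed": domain in SANDBOX_BLOCKLIST}


def open_ticket_and_notify(finding):
    """Agent 3: open a ticket and draft a plain-language message for the CISO."""
    ticket = {"id": "INC-1", "status": "open", "host": finding["host"]}
    message = (
        f"Ticket {ticket['id']}: host {finding['host']} contacted "
        f"{finding['url']}; sandbox analysis confirmed the risk."
    )
    return ticket, message


def handle_alert(alert):
    """Chain the three agents; each stage can stop the workflow early."""
    task = triage(alert)
    if task is None:
        return "ignored: asset not in inventory"
    finding = sandbox_check(task)
    if not finding["confirmed"]:
        return "closed: risk not confirmed"
    _ticket, message = open_ticket_and_notify(finding)
    return message


print(handle_alert({"host": "web-01", "url": "http://malicious.example/login"}))
```

Each stage acts as a filter, so the CISO is contacted only for alerts that are both in scope and confirmed.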

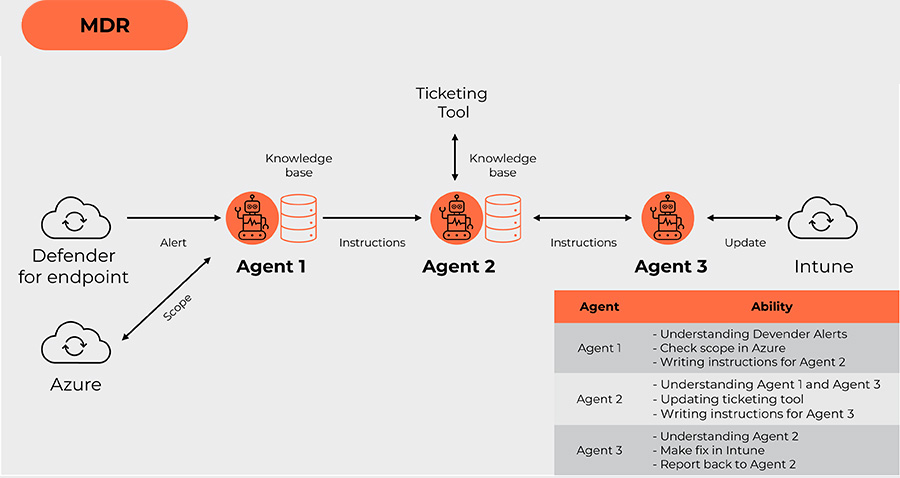

Scenario 3: Managing an MDR workflow

In this scenario, we will look at a potential managed detection and response (MDR) use case in which three agents work with Microsoft Defender for Endpoint, Microsoft Azure, Intune and a ticketing tool.

Let’s look at a breakdown of the activities:

- Defender for Endpoint generates an alert (e.g. some endpoints have become detached from Defender).

- Agent 1 picks up and “understands” the alert based on the data provided. Using the knowledge base and a lookup in Azure, Agent 1 checks whether the affected assets are in scope. If yes, Agent 1 writes instructions for Agent 2.

- Agent 2 opens and maintains a ticket in the ticketing tool and writes an instruction for Agent 3.

- Agent 3 executes the instructions provided by Agent 2 and sends a report to Agent 2.

- Agent 2 updates the ticket according to the report provided by Agent 3.

This is only one of many potential examples of how MDR support functions can be automated.
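The following sketch illustrates this flow. It assumes that the scope lookup is a simple set membership test and that Agent 3 is restricted to an allowlist of actions. The device names, the `reonboard_endpoint` action and the ticket structure are hypothetical, and no real Defender, Azure or Intune API is called.

```python
IN_SCOPE_DEVICES = {"laptop-17", "laptop-42"}  # hypothetical result of a scope lookup
ALLOWED_ACTIONS = {"reonboard_endpoint"}       # actions Agent 3 may run unattended


def execute(action, device):
    """Agent 3: run an action only if it is on the allowlist, then report back."""
    if action not in ALLOWED_ACTIONS:
        return {"device": device, "action": action, "status": "refused"}
    # A real agent would call the endpoint management tooling here.
    return {"device": device, "action": action, "status": "done"}


def run_mdr_workflow(alert, action="reonboard_endpoint"):
    """Agent 1 filters by scope; Agent 2 keeps the ticket in sync with the reports."""
    devices = [d for d in alert["devices"] if d in IN_SCOPE_DEVICES]
    if not devices:
        return None  # nothing in scope, so no ticket is opened
    ticket = {"status": "open", "log": []}
    for device in devices:
        ticket["log"].append(execute(action, device))
    all_done = all(report["status"] == "done" for report in ticket["log"])
    ticket["status"] = "resolved" if all_done else "needs_human"
    return ticket


ticket = run_mdr_workflow({"devices": ["laptop-17", "kiosk-03"]})
print(ticket["status"], [r["device"] for r in ticket["log"]])
```

The allowlist is one possible safeguard: an instruction that falls outside it is refused and the ticket is handed to a human rather than executed.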

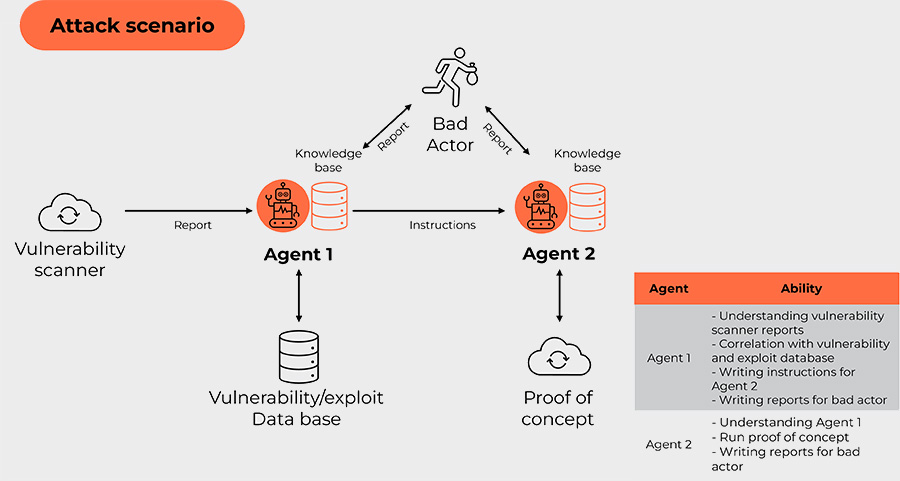

What about when under attack?

In the last scenario, we look at the potential use of multi-agent solutions by bad actors. In this scenario, two agents work with a vulnerability scanner, check the findings and even work on a proof of concept.

Here’s a breakdown of the activities:

- A vulnerability scanner generates a report from the victim environment scan.

- Agent 1 picks up and “understands” the report based on the provided data. Using the knowledge base, it checks vulnerability and exploit databases and assesses the exploitability of the findings.

- Agent 1 can report directly to the bad actor and/or write instructions for Agent 2.

- Using the knowledge base, Agent 2 can create a proof-of-concept exploit with usage instructions and report it to the bad actor.

This is only one of many cases where a multi-agent system can be used to support bad actors.

The question of cost

At the time of writing, the described workflows, run with the GPT-4 Turbo preview model, can cost anywhere from a fraction of a euro cent to several euros per run. The cost depends on factors such as the number of interactions among agents, the total number of agents involved, and the volume of tokens generated and used.

The cost can be reduced by assigning a different language model to each agent. For example, GPT-4 can be reserved for end-user interaction, while the much cheaper GPT-3.5 handles agent-to-agent interactions. In some cases, even an open-source LLM such as Mistral can be considered. Additionally, more advanced methods for limiting token output can further reduce costs. Well-optimized workflows should not exceed a few euro cents per run.
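To make the cost drivers concrete, here is a small estimator. It assumes that cost is billed per 1,000 input and output tokens and that each agent call can be described by a model name and two token counts. The prices and the model labels `large` and `small` are illustrative placeholders, not actual vendor prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_1k: float   # price per 1,000 input tokens (placeholder value)
    output_per_1k: float  # price per 1,000 output tokens (placeholder value)


# Hypothetical price list; replace with the provider's current figures.
PRICES = {
    "large": Pricing(input_per_1k=0.01, output_per_1k=0.03),
    "small": Pricing(input_per_1k=0.0005, output_per_1k=0.0015),
}


def run_cost(calls):
    """Sum the cost of one workflow run from (model, input_tokens, output_tokens) calls."""
    total = 0.0
    for model, input_tokens, output_tokens in calls:
        price = PRICES[model]
        total += input_tokens / 1000 * price.input_per_1k
        total += output_tokens / 1000 * price.output_per_1k
    return total


# Six calls on the large model versus one user-facing call on the large
# model plus five agent-to-agent calls on the small one.
all_large = [("large", 2000, 500)] * 6
mixed = [("large", 2000, 500)] + [("small", 2000, 500)] * 5
print(f"all large: {run_cost(all_large):.4f}  mixed: {run_cost(mixed):.4f}")
```

With these placeholder figures, moving the agent-to-agent calls to the cheaper model cuts the cost of a run several times over. The exact ratio depends entirely on real prices and token volumes.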

Knowing limitations and ethical considerations

Applying these still largely theoretical systems has transformative potential for cybersecurity practices. These advancements promise efficiency and innovation in how we protect our digital assets. However, as we navigate this new terrain, it’s crucial to acknowledge and address the inherent limitations that come with such technological leaps.

Here, we delve into these critical considerations, ensuring our journey toward AI integration is both responsible and informed:

- Poorly constructed systems or those encountering edge cases can inadvertently trigger what’s known as agent discussion loops. Such loops can sharply increase LLM usage and lead to unexpected costs.

- Granting AI agents direct access to production systems, as seen in the IAM and MDR use cases, carries the risk of extensive damage if an agent fails. Such agents may inadvertently cause system-wide disruptions, which underscores the need for rigorous testing and safeguarding measures.

- Unlike their human counterparts, AI agents lack legal recognition as entities, making the investigation and accountability for harm or malpractice a complex issue. This gap in legal recognition poses challenges in addressing the consequences of actions initiated by AI agents, highlighting a need for new frameworks of accountability.

- In the near term, the automation and efficiencies gained from AI integration are anticipated to elevate cybersecurity experts to tackle more complex and strategic challenges. However, a long-term perspective reveals the potential for significant shifts in employment dynamics within the sector. Automation, while unavoidable given AI’s rapid advancement, may lead to a restructuring of the cybersecurity workforce, underscoring the importance of adaptive strategies to manage these transitions.
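The discussion-loop risk in the first point can be contained with hard limits. The sketch below assumes that agents are plain callables that take and return a message, that a reply of `"DONE"` signals agreement, and that word count is an acceptable rough proxy for tokens. The names `converse` and `BudgetExceeded` are hypothetical.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a conversation hits its turn or token limit."""


def converse(agent_a, agent_b, opening, max_turns=6, max_tokens=2000):
    """Alternate between two agents until one replies DONE or a limit is hit."""
    message = opening
    tokens_used = 0
    speakers = [agent_a, agent_b]
    for turn in range(max_turns):
        reply = speakers[turn % 2](message)
        tokens_used += len(reply.split())  # crude proxy for a real token count
        if tokens_used > max_tokens:
            raise BudgetExceeded(f"token budget exceeded after {turn + 1} turns")
        if reply == "DONE":
            return turn + 1  # number of turns the agents needed
        message = reply
    raise BudgetExceeded(f"no agreement after {max_turns} turns")


def stubborn(message):
    """An agent that never concludes, which would otherwise loop forever."""
    return message + " but why?"


try:
    converse(stubborn, stubborn, "Close the ticket?", max_turns=4)
except BudgetExceeded as error:
    print("stopped:", error)
```

Raising an exception instead of silently stopping is a deliberate choice in this sketch: it forces the calling workflow to decide whether to escalate to a human.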

The inevitability of AI and automation

AI research and development is now on an exponential curve, with each innovation spurring the pace of progress even further. New discoveries and products are in the news weekly, rendering the future of this field excitingly unpredictable. However, at the turn of 2023 and 2024, several AI players, such as OpenAI, Microsoft, Dragonscale and Scalian, began to herald the multi-agent approach as a promising and cost-efficient method for constructing sophisticated AI systems.

The workflows discussed in this article are conceptual blueprints awaiting refinement for real-world application. Yet, they underscore the principles of employing multi-agent LLMs, and reveal a cost-effective pathway to enhancing automated cybersecurity measures. Additionally, they highlight the potential to reallocate human expertise towards more critical oversight and advanced tasks.

Finally, business aside, it is really exciting to witness the historic changes of this new technological revolution. Cybersecurity, and the IT environment in general, will change dramatically in the next five years. It’s an extraordinary time to be part of this shift, and it behooves us all to ready ourselves for the transformations that lie ahead.

References and sources

[1] Turing, Alan (October 1950), “Computing Machinery and Intelligence”

[2] Kahneman, Daniel (2011), “Thinking, Fast and Slow.” New York: Farrar, Straus & Giroux.

[3] System 2 Attention (is something you might need too) (2023), arXiv:2311.11829

[4] Chain-of-Thought Prompting Elicits Reasoning in Large Language Models (2022), arXiv:2201.11903

[5] Weiss, Gerhard (1999), “Multiagent Systems: A Modern Approach to Distributed Artificial Intelligence”

[6] Wooldridge, Michael (2002) “An Introduction to MultiAgent Systems”

[7] AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation (2023), arXiv:2308.08155

[8] https://github.com/yoheinakajima/babyagi