As we navigate a transformative era marked by the widespread adoption of generative AI, we are simultaneously confronted with escalating cybersecurity challenges. Recent trends indicate a 75% year-over-year increase in cyberattacks leveraging these technologies, with 85% of these incidents directly tied to the advent of generative AI.

A McKinsey survey reveals that 53% of respondents consider cybersecurity a major risk when adopting generative AI. Furthermore, according to an IBM industry survey, 94% of executives stress the importance of securing AI solutions before deploying them.

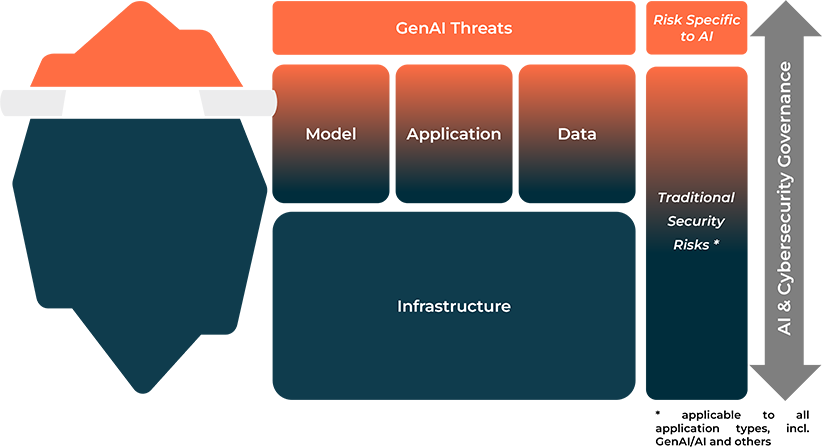

The invisible components of the AI iceberg

Organizations adopting generative AI often focus on specific threats, as highlighted in one of our previous Eviden articles on GenAI threats. This focus overlooks the broader security and privacy needs of the underlying platforms, which make up the substantial, unseen portion of the AI iceberg depicted in the image above.

While it’s critical to tackle the specific threats posed by generative AI, the majority of security risks actually stem from the foundational components of the AI ecosystem: the infrastructure, applications, data, and the models themselves.

Securing these core components as a cohesive whole, backed by effective organizational governance, addresses the lion’s share of security challenges.

In this article, we explain why organizations should prioritize the foundational security measures of the AI ecosystem. Getting the basics right is crucial, even if an organization is not yet equipped to detect or defend against attacks tailored specifically to GenAI.

Protecting infrastructure components

The largest part of the AI iceberg is made up of infrastructure components. Securing this layer is essential, because every other component builds on top of it. As many AI systems are built on cloud platforms from providers such as Microsoft and Google, it is important to understand the shared responsibility model, particularly for platform as a service (PaaS) and software as a service (SaaS) offerings. For this purpose, the French Cybersecurity Agency (ANSSI) has proposed a shared responsibility model specific to AI systems. This model helps determine responsibilities across scenarios ranging from full SaaS deployment, to subcontracting only the training or production environment, to fully internal deployment.

Organizations need to clearly define security roles between providers and customers. The focus should be on the security practices relevant to the chosen cloud service model, such as configuration management and restricting access. Strong access and authentication controls protect the cloud-based data storage used by generative AI applications, reducing the risk of unauthorized access or manipulation that could lead to biased or malicious outputs.
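Writing the provider/customer split down as data makes unowned controls easy to spot. The Python sketch below records a responsibility matrix for one deployment scenario and flags controls with no clear owner. It rests on assumptions: the control names, the owner labels, and the `find_gaps` helper are hypothetical, and the assignments are illustrative. They are not ANSSI's actual model.

```python
from typing import Dict, List, Optional

# Hypothetical controls and assignments for one scenario (e.g. full SaaS).
# They illustrate the idea only and are NOT ANSSI's actual matrix.
VALID_OWNERS = {"provider", "customer", "shared"}

saas_scenario: Dict[str, Optional[str]] = {
    "physical_security": "provider",
    "model_hosting_and_patching": "provider",
    "identity_and_access_management": "customer",
    "storage_configuration": None,          # nobody has claimed this control yet
    "prompt_and_output_logging": "vendor",  # not a recognized role, so ambiguous
}


def find_gaps(matrix: Dict[str, Optional[str]]) -> List[str]:
    """Return controls with no owner or an owner outside VALID_OWNERS."""
    return sorted(control for control, owner in matrix.items()
                  if owner not in VALID_OWNERS)


print(find_gaps(saas_scenario))
# ['prompt_and_output_logging', 'storage_configuration']
```

One matrix per scenario (full SaaS, subcontracted training, fully internal) lets the same check run across every deployment option an organization is weighing.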

Additionally, comprehensive security monitoring across all infrastructure (testing and production) is important to detect suspicious activity such as unauthorized login attempts or unusual data access patterns. Security operations teams need to build and report on detections aimed specifically at AI applications. They should also leverage frameworks such as MITRE ATLAS, which documents adversary tactics and techniques drawn from real-world attacks on AI systems, along with mitigations.
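Unauthorized login attempts are one of the suspicious activities mentioned above. The following minimal sketch flags accounts that show a burst of failed logins inside a sliding time window. It assumes a simple event format of `(timestamp, account, success)` and uses arbitrary threshold values. In practice, such a rule would more likely live in the SIEM than in application code.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

# Hypothetical thresholds; real values depend on the environment's baseline.
WINDOW_SECONDS = 300
MAX_FAILURES = 5


def detect_bruteforce(events: List[Tuple[float, str, bool]]) -> List[str]:
    """Flag accounts with more than MAX_FAILURES failed logins within any
    WINDOW_SECONDS sliding window.

    Each event is (timestamp_in_seconds, account, success); events are
    assumed to be sorted by timestamp.
    """
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    flagged: List[str] = []
    for ts, account, success in events:
        if success:
            continue
        window = recent[account]
        window.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while window and ts - window[0] > WINDOW_SECONDS:
            window.popleft()
        if len(window) > MAX_FAILURES and account not in flagged:
            flagged.append(account)
    return flagged
```

Service accounts used by training or inference pipelines deserve the same treatment as human accounts, since they often hold broad data access.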

Inventory management for core AI components is also important. For example, a dedicated model registry acts as a central library for AI models, tracking their versions and training data lineage to help guard against data poisoning. This makes it possible to identify outdated models or missing data dependencies, which helps protect AI infrastructure components.
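The registry idea can be illustrated with a small in-memory sketch. The `ModelRegistry` class and its methods are hypothetical and do not represent any product's API. The sketch records a hash of each registered dataset, links every model version to the datasets it was trained on, and answers three questions from the text: which deployed models are outdated, which lineage entries are missing, and whether a dataset has changed since registration.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ModelVersion:
    name: str
    version: int
    weights_sha256: str
    training_datasets: List[str]  # IDs of the datasets this version was trained on


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry illustrating version and lineage tracking."""
    datasets: Dict[str, str] = field(default_factory=dict)  # dataset ID -> SHA-256 at registration
    models: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register_dataset(self, dataset_id: str, content: bytes) -> None:
        self.datasets[dataset_id] = hashlib.sha256(content).hexdigest()

    def dataset_unchanged(self, dataset_id: str, content: bytes) -> bool:
        """True if the dataset still matches the hash recorded at registration."""
        return self.datasets.get(dataset_id) == hashlib.sha256(content).hexdigest()

    def register_model(self, name: str, weights: bytes,
                       training_datasets: List[str]) -> ModelVersion:
        versions = self.models.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1,
                          hashlib.sha256(weights).hexdigest(), list(training_datasets))
        versions.append(mv)
        return mv

    def missing_dependencies(self, mv: ModelVersion) -> Set[str]:
        """Datasets in a model's lineage that were never registered."""
        return {d for d in mv.training_datasets if d not in self.datasets}

    def outdated(self, deployed: Dict[str, int]) -> List[str]:
        """Deployed models whose version is older than the latest registered one."""
        return sorted(name for name, v in deployed.items()
                      if name in self.models and v < self.models[name][-1].version)
```

For example, suppose a model version is registered with a training set that the registry has never seen. Calling `missing_dependencies` on that version returns the unknown dataset's ID, which is a cue to investigate where that data came from.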

Guarding application components

Application security is a fundamental aspect of the AI ecosystem. Controls must be in place to protect against traditional attacks, such as those in the OWASP Top 10, as well as AI-specific risks such as prompt injection and training data poisoning.
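As a first, deliberately naive layer against prompt injection, an application can screen user input for well-known injection phrasing before it reaches the model. The sketch below rests on two assumptions: the patterns are illustrative, and `flag_prompt_injection` is a hypothetical helper. Pattern matching like this is easy to evade, so it complements model-side and output-side controls rather than replacing them.

```python
import re
from typing import List

# Illustrative patterns only. They catch naive injection attempts but are
# easy to evade; treat this as one layer among several.
SUSPICIOUS_PATTERNS: List[re.Pattern] = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) (system prompt|instructions)", re.IGNORECASE),
    re.compile(r"you are now (in )?developer mode", re.IGNORECASE),
]


def flag_prompt_injection(user_input: str) -> List[str]:
    """Return the patterns that matched, so the caller can log or block the request."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(user_input)]
```

Returning the matched patterns, rather than a bare boolean, gives the security monitoring pipeline something concrete to log.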

Application protection involves managing model access in two main ways:

- Control who can access the AI, particularly privileged roles such as AI developers and system administrators.

- Enforce robust security measures, such as multi-factor authentication and least-privilege role-based access control, to prevent unauthorized access.
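The two points above can be combined into a single deny-by-default authorization check. In this minimal sketch, each role is granted only the actions it needs, and sensitive actions additionally require a verified second factor. The role names, permission strings, and the `is_allowed` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# Hypothetical roles and permissions; each role gets only what it needs.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "ai_developer": frozenset({"model:read", "model:fine_tune"}),
    "sys_admin": frozenset({"model:deploy", "infra:configure"}),
    "app_user": frozenset({"model:query"}),
}

# Actions assumed to be sensitive enough to require a second factor.
MFA_REQUIRED: FrozenSet[str] = frozenset({"model:fine_tune", "model:deploy", "infra:configure"})


@dataclass(frozen=True)
class Session:
    user: str
    role: str
    mfa_verified: bool


def is_allowed(session: Session, action: str) -> bool:
    """Deny by default; allow only actions granted to the role, with MFA where required."""
    granted = ROLE_PERMISSIONS.get(session.role, frozenset())
    if action not in granted:
        return False
    if action in MFA_REQUIRED and not session.mfa_verified:
        return False
    return True
```

Unknown roles fall through to an empty permission set, so a misconfigured account gets no access rather than default access.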

It also includes monitoring the AI’s own access rights to detect and prevent misuse and data theft, through continuous monitoring and user and entity behavior analytics (UEBA).
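A very simple form of behavior analytics compares each entity's activity against its own history. This sketch assumes that daily counts of records accessed are available per entity, whether a user or an AI service account, and it flags large upward deviations using a z-score threshold of 3. Both the metric and the threshold are illustrative choices, and commercial UEBA tools use far richer models.

```python
from statistics import mean, stdev
from typing import Dict, List


def anomalous_entities(history: Dict[str, List[int]], today: Dict[str, int],
                       z_threshold: float = 3.0) -> List[str]:
    """Flag entities whose access count today is far above their own baseline.

    `history` maps an entity (a user or an AI service account) to its past daily
    counts of records accessed; `today` holds the current day's counts. Entities
    with fewer than two days of history are skipped, since no spread can be
    estimated for them. Only upward spikes are flagged.
    """
    flagged = []
    for entity, count in today.items():
        past = history.get(entity, [])
        if len(past) < 2:
            continue
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            # Perfectly flat baseline: any increase is unusual.
            if count > mu:
                flagged.append(entity)
        elif (count - mu) / sigma > z_threshold:
            flagged.append(entity)
    return sorted(flagged)
```

A retrieval service that normally reads about a hundred records a day and suddenly reads thousands is exactly the kind of data-theft signal this is meant to surface.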

Application security controls also incorporate secure software development practices such as API security and user behavior monitoring. Generative AI applications need backup and restore processes that cover data, model weights, and application versions. These processes allow rapid recovery to an earlier, untampered version if data poisoning or malicious model updates are detected.
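Recovering to an untampered version requires knowing which backup can still be trusted. The sketch below takes each snapshot together with a hash and later walks the backups from newest to oldest. It skips versions known to be compromised and any snapshot whose content no longer matches its hash. The `Snapshot` type and helper functions are hypothetical. The sketch also assumes the hashes are stored separately from the backups, since an attacker who can rewrite both defeats the check.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Snapshot:
    version: str
    artifact: bytes       # stand-in for model weights, data, or an application build
    recorded_sha256: str  # hash taken at backup time; store it apart from the backup


def take_snapshot(version: str, artifact: bytes) -> Snapshot:
    return Snapshot(version, artifact, hashlib.sha256(artifact).hexdigest())


def latest_intact(snapshots: List[Snapshot], compromised: Set[str]) -> Optional[Snapshot]:
    """Return the newest snapshot that is not known to be compromised and whose
    content still matches its recorded hash. Snapshots are ordered oldest first."""
    for snap in reversed(snapshots):
        if snap.version in compromised:
            continue  # e.g. a version produced by a malicious model update
        if hashlib.sha256(snap.artifact).hexdigest() == snap.recorded_sha256:
            return snap
    return None
```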

Defending data components

Data is the backbone of the AI ecosystem. Therefore, an organization must adopt comprehensive data governance and data lifecycle management for all AI systems. Integrating training data into the organization’s data governance framework is essential for effective sourcing, cleaning, and normalization of the data that AI systems depend on.

Implementing data security measures such as input and output validation and sanitization helps prevent unauthorized access and data leaks. The importance of such preventive measures was shown by an incident involving Nvidia’s AI software, in which an attacker manipulated the LLM into leaking confidential data.
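Output sanitization is one of the measures named above, and it can be sketched in a few lines. In this example, model output passes through redaction rules before it is returned to the user. The two rules are assumptions made for illustration: a rough email pattern and a made-up API-key format. Real deployments would maintain their own rules and typically pair them with dedicated data loss prevention (DLP) tooling.

```python
import re

# Illustrative rules for data that should never leave the application.
# The API-key format below is hypothetical.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("API_KEY", re.compile(r"\bsk-[A-Za-z0-9]{16,}\b")),
]


def sanitize_output(text: str) -> str:
    """Replace sensitive substrings in model output before returning it to the user."""
    for label, pattern in REDACTION_RULES:
        text = pattern.sub(f"[REDACTED_{label}]", text)
    return text
```

For example, `sanitize_output("Contact jane.doe@example.com")` returns `"Contact [REDACTED_EMAIL]"`.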

To reduce the risk of data and models being modified without authorization, organizations should consider, where applicable, integrity controls such as digital signatures, which provide both data integrity and non-repudiation. Additionally, strong encryption preserves the confidentiality of training and production data, keeping sensitive information secure from unauthorized access.
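The core of an integrity control is a tag computed over an artifact's bytes and checked before the artifact is used. This sketch uses HMAC-SHA256 from Python's standard library as a simpler stand-in for digital signatures. HMAC detects tampering by anyone who lacks the key, but it does not provide non-repudiation, because every key holder can create valid tags. Non-repudiation requires an asymmetric signature scheme such as Ed25519. The helper names are hypothetical.

```python
import hashlib
import hmac
import secrets


def sign_artifact(key: bytes, artifact: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a dataset's or model file's bytes."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_artifact(key: bytes, artifact: bytes, tag: str) -> bool:
    """Recompute the tag and compare in constant time to avoid timing leaks."""
    return hmac.compare_digest(sign_artifact(key, artifact), tag)


# In practice the key would live in a KMS or HSM, never alongside the artifacts.
key = secrets.token_bytes(32)
training_data = b"id,text\n1,hello\n"
tag = sign_artifact(key, training_data)
```

A training or deployment pipeline would call `verify_artifact` before loading a dataset or model and refuse to proceed if the check fails.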

Protecting model components

Key focus areas for protecting the models in generative AI applications include model validation, filtering of both inputs and outputs, and prevention of model theft. Filtering and processing should also take place during the training stage; incidents such as the exposure of Meta’s LLaMA model highlight the value of filtering during the training phase. Advanced input and output validation techniques should also be in place to block prompt injection and other adversarial inputs.
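Training-time filtering can start by rejecting records before they ever reach the training set. The sketch below assumes each record carries a `source` label and applies two illustrative rules: an allow-list of vetted sources, which guards against poisoned data from unknown origins, and a rough check for email addresses, which keeps personal data out. The source names, the rules, and `filter_training_records` are hypothetical, and a real data-hygiene pipeline would do considerably more.

```python
import re
from typing import Dict, Iterable, List

# Hypothetical allow-list of vetted data sources.
TRUSTED_SOURCES = {"internal_kb", "curated_faq"}
# Rough personal-data check (email addresses only), for illustration.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def filter_training_records(records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep records from trusted sources whose text is non-empty and has no email addresses."""
    kept = []
    for rec in records:
        text = rec.get("text", "").strip()
        if rec.get("source") not in TRUSTED_SOURCES:
            continue  # unvetted provenance: possible poisoning vector
        if not text or EMAIL.search(text):
            continue  # empty, or contains personal data the model should not memorize
        kept.append({"source": rec["source"], "text": text})
    return kept
```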

It is equally important to prevent model theft. Measures such as strong model access control and encryption help prevent threats like unauthorized access and model information leakage. Initiatives such as Mithril Security’s AICert program tackle the risks of model theft, model leakage, and supply chain attacks by using secure hardware and trusted platform modules (TPMs). AICert provides model provenance attestation by creating verifiable certificates that link specific models to their training data. These certificates verify a model’s origin, help detect training biases, and protect against malicious code.

Secure foundations. Resilient GenAI ecosystems.

While specific threats to generative AI applications often capture industry attention, it is even more crucial to address the underlying components of AI ecosystems: infrastructure, application, data, and model security. Like the larger, unseen part of an iceberg, these foundational elements account for most cybersecurity risks. Protecting them helps mitigate inherent threats, even in the absence of specialized defenses against targeted AI-based attacks. This approach secures not only the visible, GenAI-specific aspects of security but also the underlying components and platforms.