Today, numerous generative AI (GenAI) innovations have captured the attention of AWS partners and customers. They are eager to understand where this new technology applies and to explore use cases that are moving beyond betas, prototypes, and demos toward real-world innovation and productivity gains. When AWS discusses the potential of generative AI with CIOs, CTOs, and CISOs, they are excited about the possibilities, but they need security and privacy guardrails built in to drive innovation at scale.

According to a MarketResearch study, the market for generative AI in security is expected to reach $2,654 million by 2032, growing at a compound annual growth rate (CAGR) of 17.9% over the next 10 years.

AWS: For your end-to-end security solutions

Building secure GenAI solutions requires a comprehensive approach. But where do you start?

Organizations trust AWS to deliver enterprise-grade security and privacy, a choice of leading foundation models, a data-first approach, and high-performance, low-cost infrastructure to accelerate generative AI-powered innovation. AWS helps businesses use AI to build new applications that enhance employee productivity, transform customer experiences, and open up new business opportunities.

Privacy and security are top priorities for our customers, who benefit from ongoing investments in secure infrastructure and purpose-built solutions that scale.

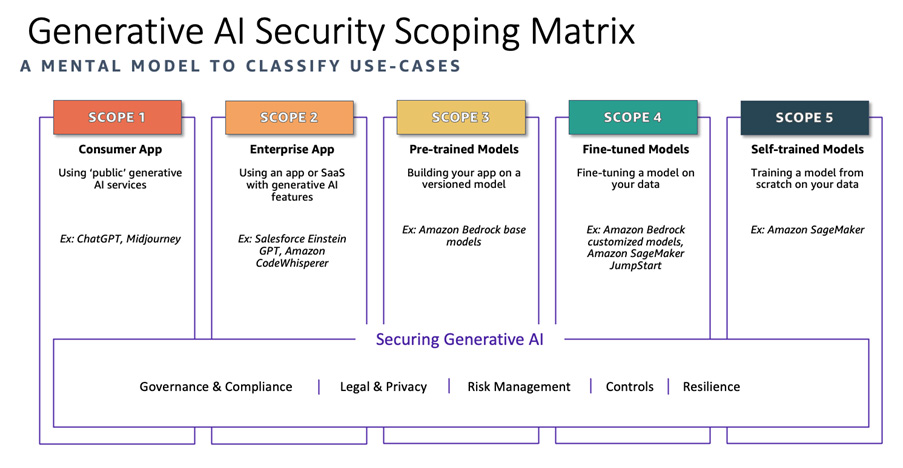

To help customers build custom generative AI solutions, AWS experts use the Generative AI Security Scoping Matrix to focus on the security disciplines that matter most for each use case.

Here are the foundational security capabilities for safeguarding generative AI solutions:

- Vulnerability management: In AI systems, vulnerabilities can emerge from the input (prompt injection), the model itself (data poisoning), or the output (model inversion). Following prompt engineering best practices can help mitigate prompt injection attacks on modern LLMs.

- Security governance: Understand the AI risks and compliance requirements for your industry and organization. Allocate sufficient security resources for governance and executive-level visibility. To increase visibility and governance, organizations can use AWS Service Catalog AppRegistry.

- Security assurance: Once security and privacy measures are defined, you must validate and demonstrate that they meet the regulatory and compliance requirements for your AI workloads. You must also demonstrate efficient and effective AI risk management while meeting your business objectives and risk tolerance. AWS Audit Manager automates evidence collection to validate controls and offers a collection of prebuilt frameworks, including one for generative AI best practices.

- Threat detection: Threat detection and mitigation for AI workloads must cover three critical components: inputs, models, and outputs. It should include threat modeling specific to AI systems as well as threat hunting. To achieve this, implement customized safeguards that align with your AI application requirements and policies, such as Guardrails for Amazon Bedrock (currently in preview).

- Infrastructure protection: Safeguarding AI workloads requires a proactive security posture. For example, placing Amazon API Gateway in front of the AI model to rate-limit model access helps protect your AI workloads. For detailed guidance, refer to the AWS Security Reference Architecture.

- Data protection: It is critical to maintain visibility into, and secure access controls over, the data used for AI development. Use data activity monitoring to detect access patterns by usage and frequency. Avoid using sensitive data to train models, tag and label the data used for training, and align those tags and labels with your data classification policies and standards. For more best practices, refer to the AWS Well-Architected Framework Machine Learning Lens.

- Application security: Vulnerability detection and mitigation need to cover your AI models. Verify that model developers run prompt testing locally in your environment. Access controls and data protection for the models should be built into the solution. To help secure application development, AWS developed Amazon CodeWhisperer, an AI-powered tool that generates code suggestions and scans code to identify hard-to-find security vulnerabilities.
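To make the rate-limiting idea under infrastructure protection more concrete, the sketch below shows a token-bucket limiter, the algorithm Amazon API Gateway documents for request throttling, where `rate` is the steady-state refill rate and `burst` is the bucket capacity. It is a minimal, single-process illustration under stated assumptions: the `TokenBucket` class and the `invoke_model` function are hypothetical names, the model call is a placeholder, and in a real deployment you would configure throttling in API Gateway rather than hand-roll it.

```python
import time
from typing import Callable


class TokenBucket:
    """Hypothetical token-bucket limiter: refills `rate` tokens/second, capped at `burst`."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        if rate <= 0 or burst <= 0:
            raise ValueError("rate and burst must be positive")
        self.rate = rate
        self.burst = burst
        self._clock = clock           # injectable clock so behavior can be tested deterministically
        self._tokens = float(burst)   # start full so an initial burst is allowed
        self._last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False if the request should be throttled."""
        now = self._clock()
        # Refill in proportion to elapsed time, never exceeding the burst capacity.
        self._tokens = min(self.burst, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


def invoke_model(prompt: str, limiter: TokenBucket) -> str:
    # Hypothetical stand-in for a real model invocation; only the throttling check is illustrated.
    if not limiter.allow():
        return "429 Too Many Requests"
    return f"model response to: {prompt!r}"


if __name__ == "__main__":
    fake_now = [0.0]
    bucket = TokenBucket(rate=1.0, burst=2, clock=lambda: fake_now[0])
    print([bucket.allow() for _ in range(3)])  # [True, True, False]
    fake_now[0] = 1.0                          # one second later, one token has refilled
    print(bucket.allow())                      # True
```

The `burst` parameter absorbs short spikes of model calls, while `rate` bounds sustained throughput, which limits both cost exposure and the speed at which an attacker can probe a model.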

Strategize. Simplify. Scale.

AWS and Eviden have partnered to modernize the AIsaac Cyber Mesh solution and to enhance its existing AI/ML features with generative AI capabilities. Together, we are building closer integration with AWS native security services such as Amazon Security Lake, along with new features that address industry-specific use cases.

The AWS Global Partner Security Initiative (GPSI) builds transformational security services with partners to reduce complexity, accelerate adoption with generative AI, and increase cyber resilience across your entire digital estate. AWS recently launched the Generative AI Competency, which includes GPSI partners and services.

Get started today

Start your GenAI journey by exploring AWS PartyRock, where you can experiment with generative AI applications and create your own with no coding skills required. To learn more, explore AWS security and generative AI services.

Let’s go build!